A Spring Boot and MongoDB application story

UPDATE: there's a follow-up to this post here, where we migrate this application to Spring WebFlux and the new CosmosDB SQL SDK to go fully reactive, and study the pros and cons of this migration.

This blog post comes from a discussion with a client who uses JHipster extensively to generate Spring Boot microservices backed by MongoDB databases. As he is an Azure customer, we had several questions:

- Can he host his whole architecture "as a service"? His Spring Boot applications would be automatically managed and scaled by Azure Web Apps, and his databases by CosmosDB. That would mean a lot less work, stress and trouble for him!

- CosmosDB supports the MongoDB API, but how good is this support? Can he just use his current applications with CosmosDB?

- What is the performance of CosmosDB, and what is the expected price?

So I created a sample Spring Boot + MongoDB application, available at https://github.com/jdubois/jhipster-cosmosdb-mongodb. This application is built with JHipster and uses one of our sample models, called the "bug tracker", which is available here.

Migrating from MongoDB to CosmosDB

Our application isn't trivial: it has several collections, with relationships between them. However, all the MongoDB API calls it makes are supported by CosmosDB, so migrating to CosmosDB is extremely simple: just use the correct connection string, and that's all!

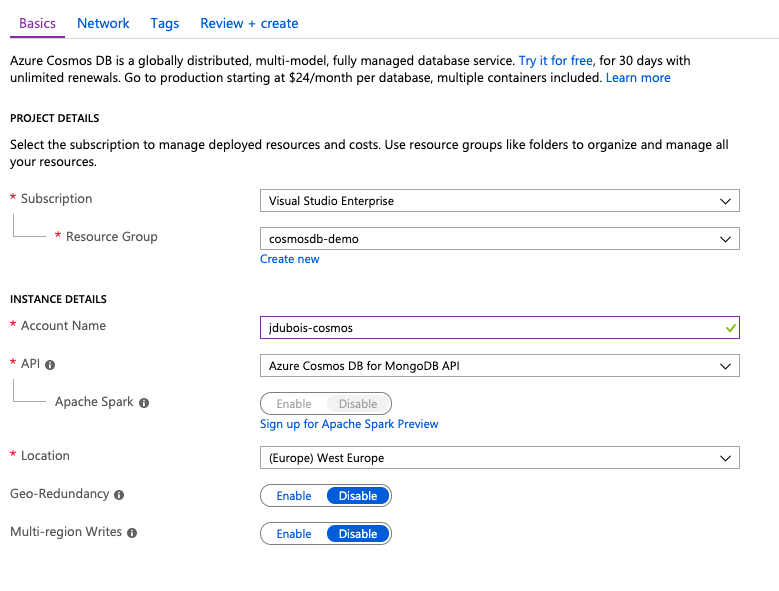

First I created a CosmosDB instance using the Azure Portal, and made sure it used the MongoDB API:

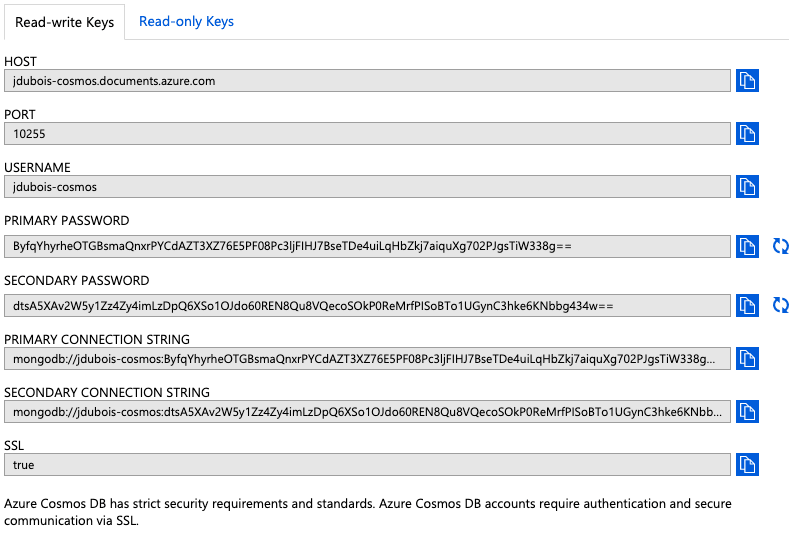

Then I selected that instance to get its connection string:

All I then needed to do, in this commit, was to use that connection string in the Spring Boot configuration.

Please note that we use Spring Data MongoDB here, and that it works perfectly fine with CosmosDB.
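For reference, here is a minimal sketch of what such a connection string looks like and how it reaches Spring Boot. It assumes the format the Azure Portal displayed for MongoDB API accounts (host "<account>.documents.azure.com", port 10255, "ssl=true&replicaSet=globaldb"); the class CosmosMongoUri and the account, key and database values are hypothetical, and in practice you should copy the exact string from your own portal. Spring Boot reads it from its standard spring.data.mongodb.uri property. The code needs Java 10 or later for URLEncoder.encode(String, Charset).

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: assembles a MongoDB-style connection string for a CosmosDB
// account that exposes the MongoDB API. Host suffix, port 10255 and query options
// follow the format the Azure Portal displayed for such accounts; always prefer the
// exact string copied from your own portal.
class CosmosMongoUri {

    static String build(String account, String accountKey, String database) {
        // Account keys are Base64, so they may contain '+', '/' and '=', which must
        // be percent-encoded inside the user-info part of the URI.
        String encodedKey = URLEncoder.encode(accountKey, StandardCharsets.UTF_8);
        return "mongodb://" + account + ":" + encodedKey
                + "@" + account + ".documents.azure.com:10255/" + database
                + "?ssl=true&replicaSet=globaldb";
    }

    public static void main(String[] args) {
        // Placeholder values; never commit a real account key.
        String uri = build("my-cosmos-account", "abc+def/ghi==", "bugtracker");
        // Spring Boot picks the connection string up from spring.data.mongodb.uri,
        // shown here as a command-line argument for the application.
        System.out.println("--spring.data.mongodb.uri=" + uri);
    }
}
```

Keeping the key out of the source code (environment variable, command-line argument or Azure application settings) is the safer choice once the application is deployed.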

Number of collections and pricing considerations

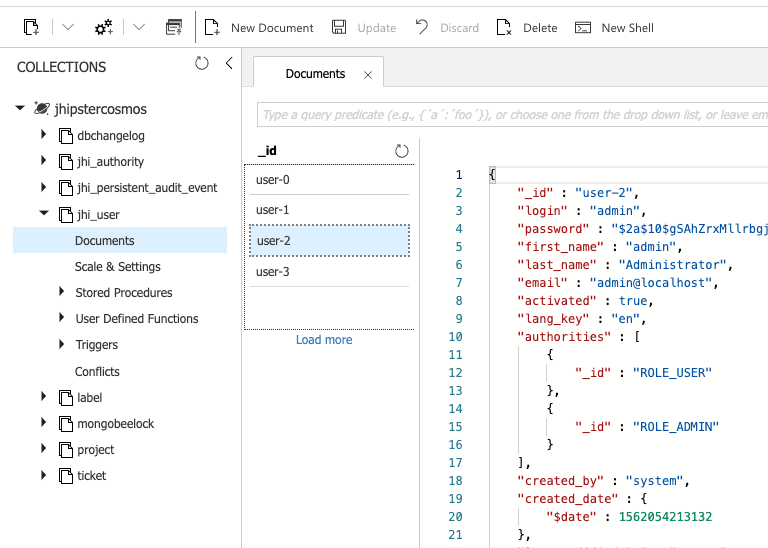

The generated database has 8 collections:

Please note that 2 of those collections, dbchangelog and mongobeelock, are used by JHipster to apply database changes automatically (if you come from the SQL database world, you might know Liquibase, which does the same thing). We also have 2 collections created by JHipster, jhi_authority and jhi_persistent_audit_event, which are of limited use.
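To make the role of dbchangelog and mongobeelock more concrete, here is a simplified, in-memory model of what a migration tool such as Mongobee does at startup: take a lock, run each change set that has not been recorded yet, then record it. This illustrates the idea only and is not Mongobee's API; the class ChangeLogRunner and the change set ids are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Simplified, in-memory model of a Mongobee/Liquibase-style migration run.
// In the real application the lock and the applied change sets are stored in the
// mongobeelock and dbchangelog collections; here they are plain fields.
class ChangeLogRunner {
    private boolean locked = false;                             // stands in for mongobeelock
    private final Set<String> applied = new LinkedHashSet<>();  // stands in for dbchangelog

    /** Runs every change set not yet recorded and returns the ids that actually ran. */
    List<String> migrate(List<String> changeSetIds) {
        // With a shared database, this lock stops two instances migrating at once;
        // in this single-threaded sketch it never actually triggers.
        if (locked) {
            throw new IllegalStateException("Another instance is already migrating");
        }
        locked = true;
        try {
            List<String> ran = new ArrayList<>();
            for (String id : changeSetIds) {
                if (applied.add(id)) { // add() returns false when the id is already recorded
                    ran.add(id);       // a real tool would execute the change here
                }
            }
            return ran;
        } finally {
            locked = false; // always release the lock, even if an exception is thrown
        }
    }

    public static void main(String[] args) {
        ChangeLogRunner runner = new ChangeLogRunner();
        // First startup: both hypothetical change sets run.
        System.out.println(runner.migrate(List.of("001-authorities", "002-users")));
        // Next startup, after a new change set was added: only the new one runs.
        System.out.println(runner.migrate(List.of("001-authorities", "002-users", "003-projects")));
    }
}
```

This is why those two collections must stay, even though they carry no business data: without them, every startup would try to re-apply every change.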

With CosmosDB, you can provision (and pay for) throughput either per collection or per database. The default configuration is per collection, which is also recommended if cost is not a concern, as it helps your application scale better. But in that case, the 4 collections we have just mentioned, which aren't really useful, will cost you money.

So if you migrate from MongoDB to CosmosDB, one of the important things to work on is the number of collections: only keep the ones that are truly useful, or this will cost you money for no business value.
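The arithmetic behind this advice is simple. The back-of-the-envelope sketch below assumes every collection gets the 1,000 RU/s default mentioned later in this post, multiplies that by the number of collections, and converts RU/s into an hourly cost. The price per 100 RU/s per hour is deliberately left as a parameter: use the current Azure price for your region rather than any figure from this code. The class ThroughputBudget is hypothetical.

```java
// Back-of-the-envelope calculation for per-collection provisioning.
// Assumes the 1,000 RU/s default from this post; the price is a parameter on purpose.
class ThroughputBudget {

    /** Total provisioned throughput when each collection has its own RU/s. */
    static int totalRuPerSecond(int collections, int ruPerCollection) {
        return collections * ruPerCollection;
    }

    /** Hourly cost, given a price per 100 RU/s per hour (look up the current Azure price). */
    static double hourlyCost(int ruPerSecond, double pricePer100RuPerHour) {
        return ruPerSecond / 100.0 * pricePer100RuPerHour;
    }

    public static void main(String[] args) {
        int all = totalRuPerSecond(8, 1_000);    // the 8 generated collections
        int unused = totalRuPerSecond(4, 1_000); // dbchangelog, mongobeelock, jhi_authority, jhi_persistent_audit_event
        System.out.printf("Provisioned: %d RU/s, of which %d RU/s (%.0f%%) bring no business value%n",
                all, unused, 100.0 * unused / all);
    }
}
```

Under these assumptions, half of the provisioned throughput pays for collections with no business value, which is why pruning collections matters more with CosmosDB than with a self-hosted MongoDB.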

Deploying to Azure Web Apps

As explained in the JHipster documentation, this is just a matter of adding the right plugin, which is done in this commit.

We are using Azure Web Apps here because we want fully managed applications. Please note that Azure Web Apps lets you "scale up" (use a bigger server) or "scale out" (add more servers on the fly); we will use the latter option later to scale without writing any code.

First tests, with 100 users

It was surprisingly easy to deploy our application, but does it scale well? To find out, let's use the Gatling load testing tool, as JHipster automatically generated a load testing configuration in src/test/gatling.

We'll focus on the Project entity:

- As soon as the entity is used, CosmosDB will create a collection for it. Be careful, as by default that collection is provisioned with 1,000 RU/s (request units per second), so it costs money.

- This entity also has an issue: it is not paginated! Pagination is useful from a business perspective (if we have 10,000 projects, showing them all to the end user is not possible), but also from a performance point of view: as we have a limited number of request units per second, let's not waste them by requesting too much data! So we limited the request to 20 projects in this commit.
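Here is a minimal sketch of what limiting the request to 20 projects means for the query: a page index and a page size become a skip/limit pair. In the real application, Spring Data builds this from a Pageable (for example PageRequest.of(page, 20)) and Spring Data MongoDB sends the corresponding skip and limit to the database; the Pagination class and the in-memory list below are hypothetical stand-ins for the repository and the Project collection.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Sketch: how a page index and a page size turn into skip/limit on a query.
// The in-memory list stands in for the Project collection.
class Pagination {
    static final int PAGE_SIZE = 20;

    /** Number of documents to skip before the requested page (0-based page index). */
    static long skip(int page, int size) {
        return (long) page * size;
    }

    /** Returns at most `size` elements, starting after the skipped ones. */
    static <T> List<T> page(List<T> all, int page, int size) {
        return all.stream()
                .skip(skip(page, size))
                .limit(size)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical data: 10,000 projects.
        List<String> projects = IntStream.range(0, 10_000)
                .mapToObj(i -> "project-" + i)
                .collect(Collectors.toList());
        List<String> second = page(projects, 1, PAGE_SIZE);
        System.out.println(second.size() + " projects: " + second.get(0) + " .. " + second.get(second.size() - 1));
    }
}
```

The important difference with this in-memory version is that, against the database, only the 20 requested documents are returned, instead of all 10,000.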

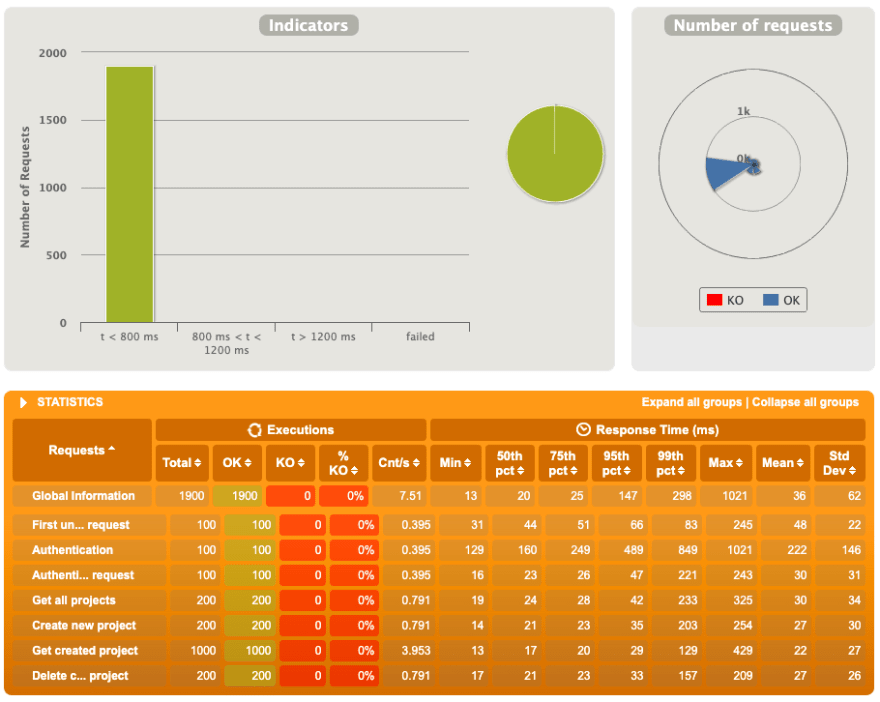

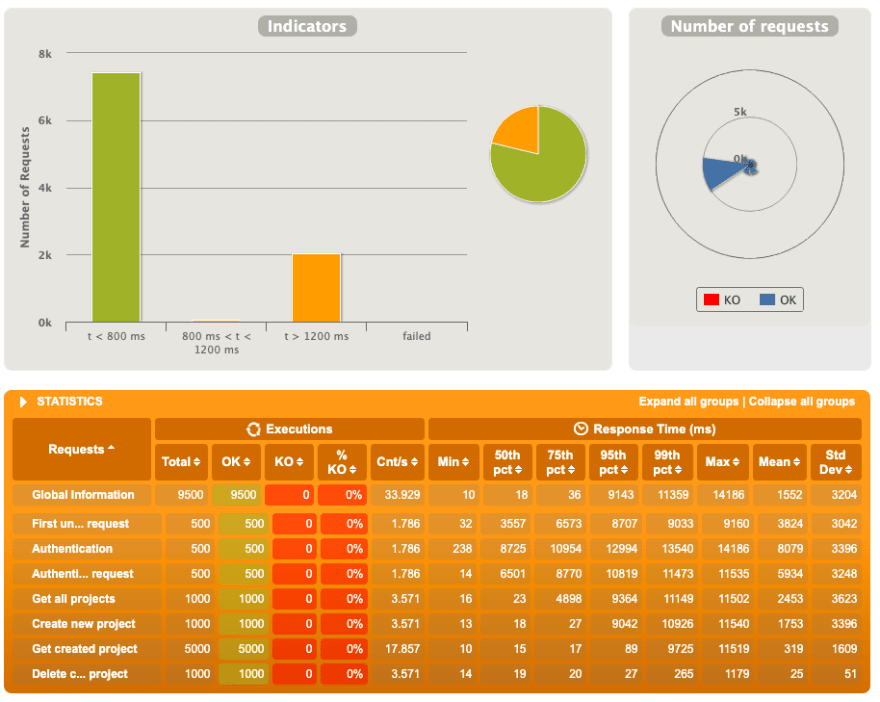

Our first test used only 100 concurrent users, with a peak of 21 requests/second. This isn't a big load, so everything went fine:

Going to 500 users

As the first test was too easy, we decided to push the system to 500 users. The application held up, with a peak of 136 requests/second, but it started to show some issues.

In fact, some requests were slow because the authentication process was taking too much time:

Reaching 1,000 users

To go higher, we increased the throughput to 5,000 RU/s for the authentication collections and the Project collection. That's the great thing about paying per collection: you can choose which collections to prioritize, depending on your business needs.
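A rough way to reason about this upgrade: the number of requests per second a collection can serve is its provisioned RU/s divided by the RU charge of each request. The charge of 10 RU per request used below is a hypothetical figure, not a measurement from these tests; the real charge depends on document size, indexing and query shape, and requests beyond the budget are rate-limited by CosmosDB. The class RuCapacity is hypothetical.

```java
// Rough capacity estimate: requests/second that fit in a collection's provisioned RU/s.
// The 10 RU charge per request is a hypothetical figure, not a measurement.
class RuCapacity {

    static double maxRequestsPerSecond(int provisionedRuPerSecond, double ruPerRequest) {
        return provisionedRuPerSecond / ruPerRequest;
    }

    public static void main(String[] args) {
        double ruPerRequest = 10.0;                                // hypothetical
        double before = maxRequestsPerSecond(1_000, ruPerRequest); // default throughput
        double after = maxRequestsPerSecond(5_000, ruPerRequest);  // after the upgrade
        // Requests above this budget are rate-limited by CosmosDB instead of being served.
        System.out.printf("%.0f -> %.0f requests/second%n", before, after);
    }
}
```

Whatever the real per-request charge, the ratio holds: multiplying a collection's RU/s by 5 multiplies its request budget by 5, which is what makes per-collection tuning useful.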

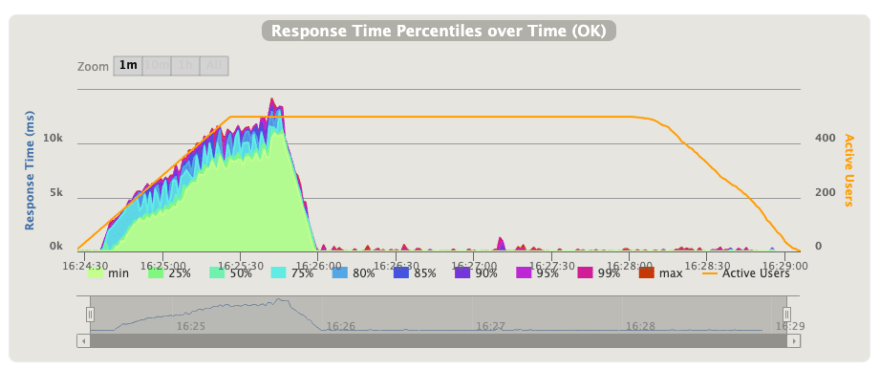

This allowed us to reach 1,000 users, but we still had the authentication issue, probably because this process is too costly for a single Web application instance. That's why the first requests are slow, before things go much faster, with a peak of 308 requests/second:

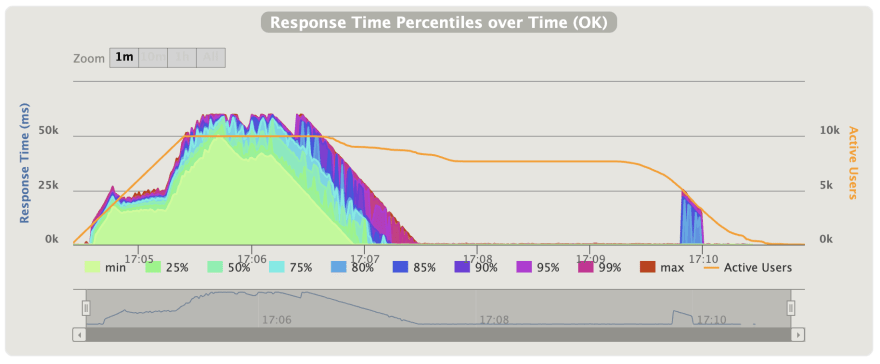

Up to 10,000 users

With the previous configuration, it was impossible to go above 2,500 users, as the application server wasn't able to handle the load. The good thing with Azure Web Apps is that it can automatically scale your Web application, configured from a simple screen:

This solves the authentication issue, which needs processing power, as the work is now shared across several servers. It also allows us to support more concurrent requests.
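The autoscale screen essentially lets you define rules such as "add an instance when CPU is high, remove one when it is low", within minimum and maximum instance counts. The sketch below is a simplified model of such a rule, not Azure's implementation: the class AutoscaleRule, the 70%/30% CPU thresholds and the 1 to 5 instance range are hypothetical, and real autoscale also evaluates metrics over a time window and applies cool-down periods, which this code ignores.

```java
// Simplified model of a metric-based scale-out/scale-in rule (hypothetical values).
// Real autoscale also uses time windows and cool-down periods, ignored here.
class AutoscaleRule {
    final double scaleOutCpuPercent;
    final double scaleInCpuPercent;
    final int minInstances;
    final int maxInstances;

    AutoscaleRule(double scaleOutCpuPercent, double scaleInCpuPercent, int minInstances, int maxInstances) {
        this.scaleOutCpuPercent = scaleOutCpuPercent;
        this.scaleInCpuPercent = scaleInCpuPercent;
        this.minInstances = minInstances;
        this.maxInstances = maxInstances;
    }

    /** Returns the instance count to use, given the current count and the average CPU. */
    int nextInstanceCount(int current, double averageCpuPercent) {
        int next = current;
        if (averageCpuPercent > scaleOutCpuPercent) {
            next = current + 1; // scale out by one instance
        } else if (averageCpuPercent < scaleInCpuPercent) {
            next = current - 1; // scale in by one instance
        }
        // Never go outside the configured instance limits.
        return Math.max(minInstances, Math.min(maxInstances, next));
    }

    public static void main(String[] args) {
        AutoscaleRule rule = new AutoscaleRule(70.0, 30.0, 1, 5);
        System.out.println(rule.nextInstanceCount(1, 85.0)); // high CPU: one more instance
        System.out.println(rule.nextInstanceCount(5, 95.0)); // already at the maximum
        System.out.println(rule.nextInstanceCount(3, 10.0)); // low CPU: one instance fewer
    }
}
```

The maximum instance count is also a cost ceiling: it caps how much the application can spend when the load spikes.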

Using this setup, we were able to reach 10,000 active users:

We also had a peak of 1,029 requests/second:

However, we had a 2% error rate here, as we ramped up to 10,000 users too quickly for the system to handle all of them correctly.

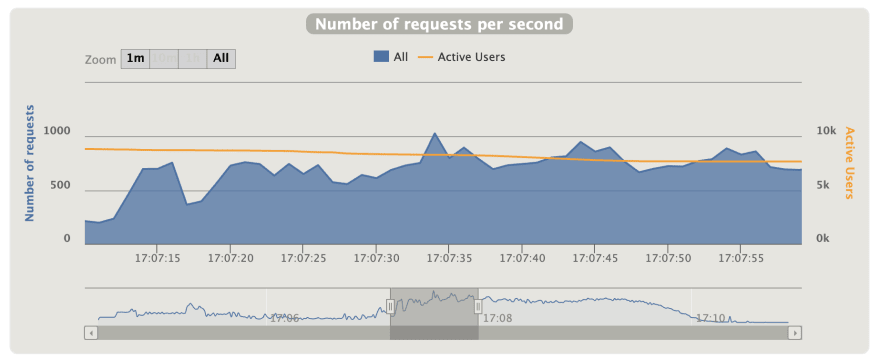

Reaching 900 requests/second without any issues

As our bottleneck here is the authentication process, which only relies on raw Web application processing power, we ran a similar test with 1,000 users again, but this time each user made 10 times more requests. The result is a very smooth and stable application, which consistently delivers 900 requests/second:

Conclusions and thoughts

Our tests show that:

- CosmosDB with the MongoDB API works very well at the API level, and scales to handle the load

- Azure Web Apps makes it easy to host and scale Spring Boot applications

We went from 100 to 10,000 users without much trouble, except that we had to increase the number of request units for CosmosDB and configure auto-scaling for Azure Web Apps. This allowed us to keep using Spring Boot and Spring Data MongoDB as usual: both have developer-friendly, concise APIs that many people love. If needed, we could probably have gone further and handled even more users, as long as we were ready to pay for it: the good news is that scalability works well and seems to be linear.
