Azure App service sticky session #1302

Issue moved from dotnet/aspnetcore#33440

From @BrennanConroy on Thursday, June 10, 2021 8:02:23 PM: What is a browser fan? What version of Blazor are you using?

From @Zquiz on Thursday, June 10, 2021 8:09:11 PM: It happens in all browsers our users have available, so Chrome, Edge, and Firefox, all fully updated.

From @BrennanConroy on Thursday, June 10, 2021 8:19:43 PM: Can you gather client-side logs to see why the client disconnected?

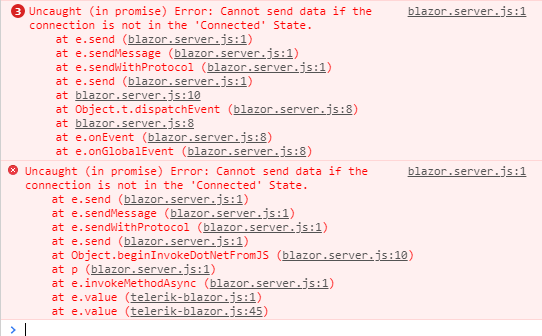

From @Zquiz on Friday, June 11, 2021 11:31:09 AM Yeah sure. |

From @BrennanConroy on Friday, June 11, 2021 5:05:25 PM: Ideally SignalR logs only, but we can probably filter out the other logs if there aren't too many. Also, please capture them at Debug or Trace level.

From @Zquiz on Monday, June 14, 2021 3:25:10 PM: Sorry for the delay.

@Zquiz When you use Azure SignalR Service, the App Service "ARR affinity" and "Web sockets" settings are not required. Also, if autoscale is enabled, clients connected through a server instance that is removed during scale-in will be disconnected, so this is likely expected behavior. Please check whether a scale-in happened at the same time as the disconnects you saw.

Thanks for the reply.

Silly question: could this behavior be caused by the App Service health check?

It's not related to the App Service health check. Client disconnects are by design when the corresponding server connection is dropped, for example due to autoscale or a server-side connectivity issue. With client automatic reconnect enabled, the impact on clients should be minor: they reconnect and are routed to an available server connection. If client disconnects matter to you, disable autoscale for stability.

These settings are for self-hosted SignalR. When using Azure SignalR Service, clients set up their WebSocket connection directly to Azure SignalR Service, so the ARR affinity and Web sockets settings are not used. Could you share your resource ID with me (jixin[at]microsoft.com) so I can investigate further?

We confirmed offline that the issue does not reproduce in a project created from the default Blazor Server template and is likely related to the customer's server-side logic, so I'm closing the issue.

From @Zquiz on Thursday, June 10, 2021 6:53:05 PM

Hello,

I hope someone can help, or just tell me that this is how it works. We have moved our Blazor Server project from an on-premises hosted environment to Azure App Service.

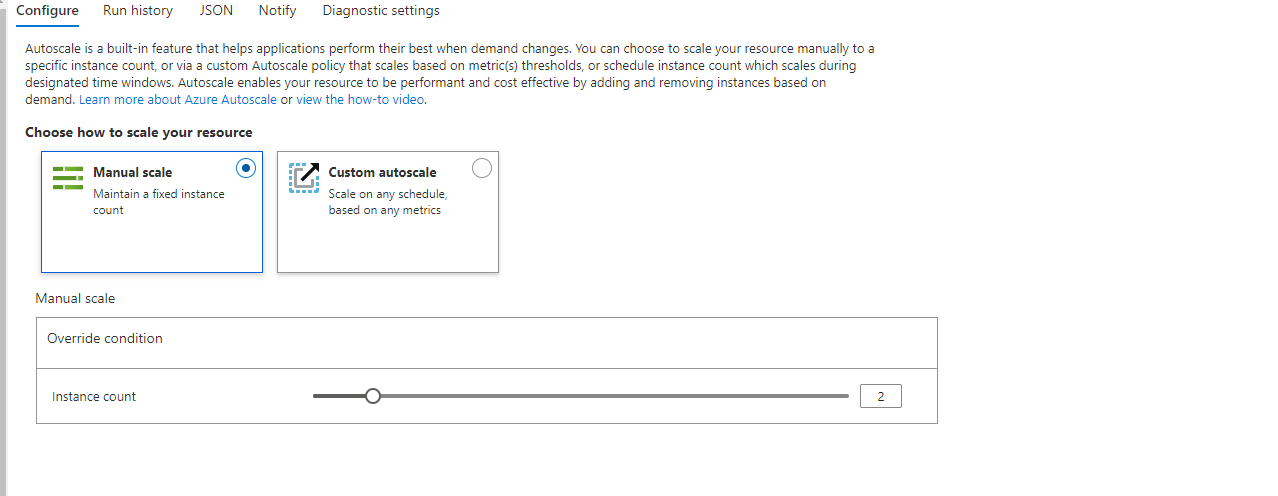

We tried using these settings:

- Autoscale when memory usage hits x% (works)
- Always On: on
- ARR affinity: off
- Web sockets: on

We followed this documentation for the setup:

https://docs.microsoft.com/en-us/aspnet/core/blazor/host-and-deploy/server?view=aspnetcore-5.0

We also use Azure SignalR Service, as recommended by Microsoft for this scenario. We set `Microsoft.Azure.SignalR.ServerStickyMode.Required` in our code, so connections should be sticky.
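For readers following along, here is a minimal sketch of where `ServerStickyMode.Required` is typically set in a .NET 5 Blazor Server `Startup` class. It is not the poster's actual code; it assumes the `Microsoft.Azure.SignalR` NuGet package is installed and that the connection string is supplied through the package's default `Azure:SignalR:ConnectionString` configuration key (for example, as an App Service application setting).

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Azure.SignalR;
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddRazorPages();

        // Route Blazor Server circuits through Azure SignalR Service.
        // Assumption: the connection string comes from the default
        // "Azure:SignalR:ConnectionString" configuration key.
        services.AddServerSideBlazor()
            .AddAzureSignalR(options =>
            {
                // Required: the client is routed to the app server instance that
                // handled its negotiate request (the one holding the Blazor circuit).
                // If that instance is unavailable, the client is not rerouted.
                options.ServerStickyMode = ServerStickyMode.Required;
            });
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseStaticFiles();
        app.UseRouting();

        app.UseEndpoints(endpoints =>
        {
            endpoints.MapBlazorHub();
            endpoints.MapFallbackToPage("/_Host");
        });
    }
}
```

Because `Required` pins each client to one app server instance, removing that instance (for example, during an autoscale scale-in) ends the client's connection, and the client has to reconnect and start a new circuit.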

The issue we're seeing is that after 10-15 minutes, a browser tab ("fan") disconnects if the user is not on that tab but working in another one.

If we enable ARR affinity cookies, it seems to negotiate with SignalR just fine. But then we lose the effect of moving users onto a new instance when autoscale creates one, so the load isn't distributed evenly.

Is it something we're doing wrong on our end, or are we missing information about some failsafe built into SignalR?

Bonus info: on-premises, we used a load balancer with sticky sessions, and that worked fine most of the time.

Currently, we autoscale to 9 instances in the morning to even out the load, since the ARR affinity cookie keeps users on the same instance for the whole workday.

Documentation I have been following:

https://docs.microsoft.com/en-us/aspnet/core/signalr/publish-to-azure-web-app?view=aspnetcore-5.0

https://docs.microsoft.com/en-us/aspnet/core/blazor/host-and-deploy/server?view=aspnetcore-5.0
