How to make machine learning models interpretable: A seminar series at UC Berkeley

On September 9th, 10th and 11th, 2019, we organized a few seminars at the University of California, Berkeley around the topic of Ethics in AI. Specifically, we shared a few insights and practical demos on how to make machine learning models interpretable and accessible with Azure Machine Learning. In the next few paragraphs, we summarize the most important aspects presented during our seminars:

- How to interpret your model

- Interpretability during training

- Interpretability at inferencing time

1. How to interpret your model

When it comes to predictive modeling, you have to make a trade-off: do you just want to know what is predicted? For example, the probability that a customer will churn or how effective some drug will be for a patient. Or do you want to know why the prediction was made and possibly pay for the interpretability with a drop in predictive performance?

This section briefly describes how to explain why your model made the predictions it did, using the interpretability packages of the Azure Machine Learning Python SDK.

During the training phase of the development cycle, model designers and evaluators can use the interpretability output of a model to verify hypotheses and build trust with stakeholders. They can also use these insights to debug the model, validate that its behavior matches their objectives, and check for bias.

In machine learning, features are the data fields used to predict a target data point. For example, to predict credit risk, data fields for age, account size, and account age might be used. In this case, age, account size, and account age are features. Feature importance tells you how each data field affected the model’s predictions. For example, age may be heavily used in the prediction while account size and account age don’t affect the predictions significantly. This process allows data scientists to explain resulting predictions, so that stakeholders have visibility into which data fields are most important in the model.
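As a rough illustration of what a feature importance score measures, the following sketch computes permutation feature importance in plain Python for a toy credit-risk model. The model, the data, and every name in it are hypothetical and chosen only to mirror the age / account size / account age example above; it is not the SDK's implementation.

```python
import random

# Hypothetical toy model: the prediction depends strongly on age, weakly on
# account size, and not at all on account age.
def predict(row):
    age, account_size, account_age = row
    return 1 if (0.9 * age + 0.1 * account_size) > 50 else 0

def accuracy(rows, labels):
    correct = sum(predict(r) == y for r, y in zip(rows, labels))
    return correct / len(rows)

def permutation_importance(rows, labels, column, rng):
    """Drop in accuracy after shuffling the values of one feature column."""
    baseline = accuracy(rows, labels)
    shuffled = [r[column] for r in rows]
    rng.shuffle(shuffled)
    permuted = [r[:column] + (v,) + r[column + 1:]
                for r, v in zip(rows, shuffled)]
    return baseline - accuracy(permuted, labels)

rng = random.Random(0)
rows = [(rng.uniform(18, 90), rng.uniform(0, 100), rng.uniform(0, 30))
        for _ in range(500)]
# For simplicity the labels are produced by the model itself,
# so the baseline accuracy is 1.0.
labels = [predict(r) for r in rows]

feature_names = ["age", "account size", "account age"]
importances = {name: permutation_importance(rows, labels, i, rng)
               for i, name in enumerate(feature_names)}
for name, score in importances.items():
    print(f"{name}: {score:.3f}")
```

Shuffling a feature the model relies on breaks many predictions, so its score is large; shuffling a feature the model ignores changes nothing, so its score is zero.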

Using these tools, you can explain machine learning models globally on all data, or locally on a specific data point, using state-of-the-art technologies in an easy-to-use and scalable fashion.

The interpretability classes are made available through multiple SDK packages (see the Azure Machine Learning documentation for how to install SDK packages):

- azureml.explain.model: the main package, containing functionalities supported by Microsoft.

- azureml.contrib.explain.model: preview and experimental functionalities that you can try.

- azureml.train.automl.automlexplainer: the package for interpreting automated machine learning models.

You can apply the interpretability classes and methods to understand the model’s global behavior or specific predictions. The former is called global explanation and the latter is called local explanation.

The methods can also be categorized by whether they are model agnostic or model specific. Some methods target certain types of models. For example, SHAP’s tree explainer only applies to tree-based models. Other methods treat the model as a black box, such as the mimic explainer or SHAP’s kernel explainer. The explain package leverages these different approaches based on data sets, model types, and use cases.

The output is a set of information on how a given model makes its prediction, such as:

- Global/local relative feature importance

- Global/local feature and prediction relationship
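To make the difference between local and global importance concrete, here is a minimal sketch that computes exact Shapley values (the quantity SHAP approximates) for a hypothetical three-feature model, then averages their absolute values into a global ranking. The model, the data, the function names, and the use of a single all-zero baseline row to stand in for "missing" features are all assumptions made for illustration; the SHAP library itself uses more sophisticated estimators.

```python
from itertools import permutations

FEATURES = ["age", "account_size", "account_age"]

def model(x):
    # Hypothetical scoring function with an interaction term.
    return 2.0 * x[0] + 0.5 * x[1] + 0.1 * x[0] * x[2]

def shapley_values(f, x, baseline):
    """Exact Shapley values: the average marginal contribution of each
    feature over all orderings, with absent features set to the baseline."""
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = f(current)
        for i in order:
            current[i] = x[i]          # "switch on" feature i
            value = f(current)
            totals[i] += value - previous
            previous = value
    return [t / len(orderings) for t in totals]

data = [[1.0, 4.0, 2.0], [3.0, 0.0, 1.0], [2.0, 2.0, 5.0]]
baseline = [0.0, 0.0, 0.0]

# Local explanation: one importance value per feature for each data point.
local = [shapley_values(model, x, baseline) for x in data]

# Global explanation: mean absolute local importance per feature.
global_importance = [sum(abs(row[i]) for row in local) / len(local)
                     for i in range(len(FEATURES))]

for name, score in sorted(zip(FEATURES, global_importance),
                          key=lambda pair: -pair[1]):
    print(f"{name}: {score:.3f}")
```

For each data point, the local values sum to the difference between the model's prediction for that point and its prediction for the baseline, which is what makes them readable as a per-feature breakdown of a single prediction.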

The SDK contains two sets of explainers: direct explainers and meta explainers.

Direct explainers come from integrated libraries. The SDK wraps all the explainers so that they expose a common API and output format. If you prefer, you can invoke these explainers directly instead of going through the common API and output format. The direct explainers mentioned in this post include SHAP’s tree, deep, and kernel explainers, the mimic explainer, and the PFI (permutation feature importance) explainer.

Meta explainers automatically select a suitable direct explainer and generate the best explanation info based on the given model and data sets. The meta explainers leverage all the libraries (SHAP, LIME, Mimic, etc.) that we have integrated or developed. The following are the meta explainers available in the SDK:

- Tabular Explainer: Used with tabular datasets.

- Text Explainer: Used with text datasets.

- Image Explainer: Used with image datasets.
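The idea of a meta explainer choosing a direct explainer can be sketched as a simple "try each candidate in order" loop. Everything below is hypothetical: the stub classes, the attribute checks, and the `select_explainer` helper are stand-ins invented for this illustration, and the SDK's actual selection logic may differ.

```python
class TreeExplainerStub:
    # Model-specific: accepts only models that look tree-based.
    def __init__(self, model):
        if not hasattr(model, "tree_"):
            raise ValueError("not a tree-based model")
        self.model = model

class DeepExplainerStub:
    # Model-specific: accepts only models that look like neural networks.
    def __init__(self, model):
        if not hasattr(model, "layers"):
            raise ValueError("not a neural network")
        self.model = model

class KernelExplainerStub:
    # Model-agnostic fallback: only needs a callable predict method.
    def __init__(self, model):
        if not callable(getattr(model, "predict", None)):
            raise ValueError("model has no predict method")
        self.model = model

def select_explainer(model, candidates=(TreeExplainerStub,
                                        DeepExplainerStub,
                                        KernelExplainerStub)):
    """Return the first candidate explainer that accepts the model."""
    for explainer_cls in candidates:
        try:
            return explainer_cls(model)
        except ValueError:
            continue
    raise ValueError("no suitable explainer found")

class ToyTree:
    tree_ = object()
    def predict(self, rows):
        return [0 for _ in rows]

class ToyBlackBox:
    def predict(self, rows):
        return [1 for _ in rows]

print(type(select_explainer(ToyTree())).__name__)      # TreeExplainerStub
print(type(select_explainer(ToyBlackBox())).__name__)  # KernelExplainerStub
```

Ordering the candidates from most specific to most general means a specialized explainer is used whenever it applies, with the black-box explainer as the fallback.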

2. Interpretability during training

To initialize an explainer object, you need to pass your model and some training data to the explainer's constructor. You can also optionally pass in feature names and output class names (if doing classification), which will be used to make your explanations and visualizations more informative. TabularExplainer, MimicExplainer, and PFIExplainer can all be instantiated locally. TabularExplainer calls one of three SHAP explainers underneath (TreeExplainer, DeepExplainer, or KernelExplainer) and automatically selects the most appropriate one for your use case. You can, however, call each of its three underlying explainers directly. Here is how to instantiate an explainer object using TabularExplainer:

```python
from azureml.explain.model.tabular_explainer import TabularExplainer

# "features" and "classes" fields are optional
explainer = TabularExplainer(model,
                             x_train,
                             features=breast_cancer_data.feature_names,
                             classes=classes)
```

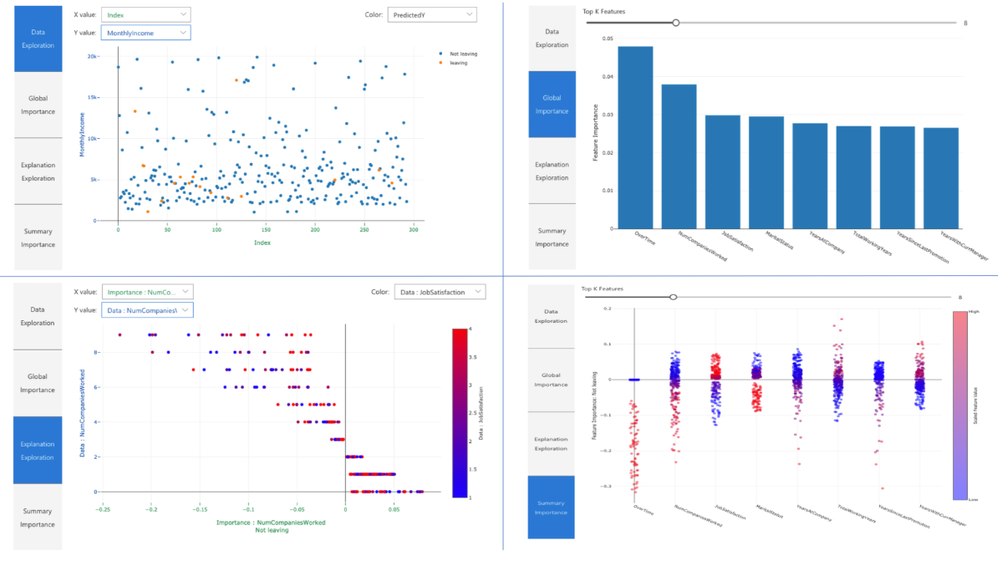

Global visualizations

The following plots provide a global view of the trained model along with its predictions and explanations.

| Plot | Description |
| --- | --- |
| Data Exploration | An overview of the dataset along with prediction values. |
| Global Importance | Shows the top K (configurable K) important features globally. This chart is useful for understanding the global behavior of the underlying model. |
| Explanation Exploration | Demonstrates how a feature is responsible for making a change in the model’s prediction values (or probability of prediction values). |
| Summary | Uses signed local feature importance values across all data points to show the distribution of the impact each feature has on the prediction value. |

Local visualizations

You can click on any individual data point in any of the preceding plots to load the local feature importance plot for that data point.

| Plot | Description |
| --- | --- |
| Local Importance | Shows the top K (configurable K) important features for the selected data point. This chart is useful for understanding the local behavior of the underlying model on a specific data point. |
| Perturbation Exploration | Allows you to change feature values of the selected data point and observe how those changes affect the prediction value. |
| Individual Conditional Expectation (ICE) | Allows you to change a feature value from a minimum value to a maximum value to see how the data point's prediction changes when a feature changes. |
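The computation behind an ICE plot is small enough to sketch by hand: hold one data point fixed, sweep a single feature across a range, and record the prediction at each step. The model, feature names, and the `ice_curve` helper below are hypothetical and only illustrate the idea; they are not part of the SDK.

```python
def predict(point):
    # Hypothetical model: predicted risk falls with account age
    # and rises slightly with balance.
    return 0.8 - 0.02 * point["account_age"] + 0.001 * point["balance"]

def ice_curve(predict_fn, point, feature, low, high, steps=5):
    """Predictions for one data point as `feature` sweeps from low to high,
    with every other feature held at its original value."""
    curve = []
    for k in range(steps):
        value = low + (high - low) * k / (steps - 1)
        modified = dict(point, **{feature: value})  # copy, then override
        curve.append((value, predict_fn(modified)))
    return curve

point = {"account_age": 3.0, "balance": 100.0}
curve = ice_curve(predict, point, "account_age", low=0.0, high=20.0)
for value, prediction in curve:
    print(f"account_age={value:5.1f} -> prediction={prediction:.3f}")
```

Perturbation exploration is the same idea restricted to a single step: change one or more feature values of the selected point and compare the new prediction with the original one.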

3. Interpretability at inferencing time

The explainer can be deployed along with the original model and can be used at scoring time to provide the local explanation information. We also offer lighter-weight scoring explainers to make interpretability at inferencing time more performant. The process of deploying a lighter-weight scoring explainer is similar to deploying a model and includes the following steps:

- Create an explanation object (e.g., using TabularExplainer):

```python
from azureml.contrib.explain.model.tabular_explainer import TabularExplainer

explainer = TabularExplainer(model,
                             initialization_examples=x_train,
                             features=dataset_feature_names,
                             classes=dataset_classes,
                             transformations=transformations)
```

- Create a scoring explainer using the explanation object:

```python
from azureml.contrib.explain.model.scoring.scoring_explainer import KernelScoringExplainer, save

# create a lightweight explainer for use at scoring time
scoring_explainer = KernelScoringExplainer(explainer)

# pickle scoring explainer locally
OUTPUT_DIR = 'my_directory'
save(scoring_explainer, directory=OUTPUT_DIR, exist_ok=True)
```

- Configure and register an image that uses the scoring explainer model:

```python
import os

# `run` is assumed to be an existing Azure Machine Learning Run object
# register explainer model using the path from ScoringExplainer.save - could be done on remote compute
# scoring_explainer.pkl is the filename on disk, while my_scoring_explainer.pkl will be the filename in cloud storage
run.upload_file('my_scoring_explainer.pkl', os.path.join(OUTPUT_DIR, 'scoring_explainer.pkl'))

scoring_explainer_model = run.register_model(model_name='my_scoring_explainer',
                                             model_path='my_scoring_explainer.pkl')
print(scoring_explainer_model.name, scoring_explainer_model.id, scoring_explainer_model.version, sep='\t')
```

For the full tutorial we presented at UC Berkeley, see Model interpretability with Azure Machine Learning. For a collection of Jupyter notebooks that demonstrate the steps above in different data science scenarios, see the Azure Machine Learning Interpretability sample notebooks.

To learn more, you can also read the following articles and notebooks:

- Azure Machine Learning Notebooks: aka.ms/AzureMLServiceGithub

- Azure Machine Learning Service: aka.ms/AzureMLservice

- Get started with Azure ML: aka.ms/GetStartedAzureML

- Automated Machine Learning Documentation: https://aka.ms/AutomatedMLDocs

- What is Automated Machine Learning? https://aka.ms/AutomatedML

- Model Interpretability with Azure ML Service: https://aka.ms/AzureMLModelInterpretability

- Azure Notebooks: https://aka.ms/AzureNB

- Python Microsoft: https://aka.ms/PythonMS

- Azure ML for VS Code: aka.ms/AzureMLforVSCode

- Automated and Interpretable Machine Learning: https://medium.com/microsoftazure/automated-and-interpretable-machine-learning-d07975741298

- Interpretable Machine Learning Book by Christoph Molnar: https://christophm.github.io/interpretable-ml-book/
